Video generated by NotebookLM

Below is the video’s transcript, which NotebookLM generated entirely from human-provided resources.

An LLM Doesn’t “Think”

What if the biggest threat to modern education is also its greatest opportunity? Let’s take a look at the rise of artificial intelligence in the classroom. It’s a tool that has a lot of educators, well, pretty worried, but it might just be the thing that pushes us toward a much, much deeper way of learning.

Okay, so here’s how we’re going to break this down. We’ll start with the problem everyone’s talking about.

Then we’ll pull back the curtain on the tech itself.

From there, we’ll get into a whole new way of thinking about AI that could really transform how we teach and how we learn.

So, first things first: just how big is this challenge? I mean, right now, classrooms everywhere are trying to figure out what to do with a tool that feels incredibly powerful and, honestly, deeply unsettling. It’s creating this weird new dynamic between students and teachers.

92% of UK Undergraduates Use AI

Yeah. Saying that AI use is widespread doesn’t even begin to cover it. A recent survey found that a staggering 92% of British undergraduates are already using these AI tools. It’s not some niche thing anymore. It’s a campus habit, you know, as common as scrolling through your phone. And this is exactly what’s creating that shadowy zone of mistrust between students and teachers.

And this quote just nails the core fear, doesn’t it? The big concern is that AI lets students hand in work that looks like they’ve learned something while they’ve completely bypassed the messy, difficult, and, frankly, essential process of actually learning. Because let’s be honest, the process is the whole point.

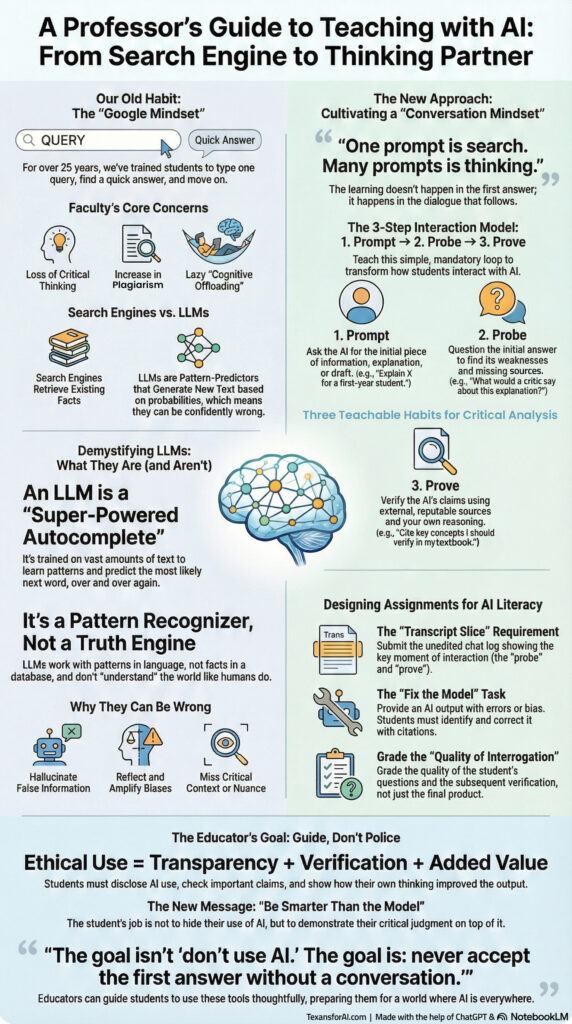

Demystifying LLMs

So to really get a handle on this fear, we first have to understand what this technology is and, just as importantly, what it isn’t. So let’s demystify these things called large language models, or LLMs. Okay, this is the most crucial part to get. An LLM doesn’t think. It doesn’t understand truth the way a person does. It is, at its core, a ridiculously sophisticated pattern-matching machine. It’s been fed a library bigger than you can imagine, and its entire job is to guess the next most probable word, over and over, until it spits out a sentence that looks right.

And when you think of it that way, everything changes. Look, it’s an amazing pattern recognizer and a fantastic language generator for creating drafts and options, but it is not a truth machine. It has no moral compass, and it is absolutely, 100% not a replacement for human judgment. Just because it sounds fluent doesn’t mean it’s accurate.
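To make the “guess the next most probable word” idea concrete, here is a minimal, hypothetical Python sketch. It is a toy bigram model used purely as an illustrative assumption: real LLMs use large neural networks over subword tokens, and they often sample from a probability distribution rather than always taking the single top choice. The function names `train_bigrams` and `generate` and the tiny training text are invented for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words followed it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, max_words: int = 8) -> str:
    """Greedily append the most frequent follower of the last word.

    There is no notion of meaning or truth here: each step only asks
    "which word most often came next?" Ties go to the word seen first.
    """
    out = [start]
    for _ in range(max_words):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # no observed continuation, so stop
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


if __name__ == "__main__":
    corpus = ("the student asks a question . the model answers the question . "
              "the student checks the answer .")
    model = train_bigrams(corpus)
    print(generate(model, "the"))
    # prints: the student asks a question . the student asks
```

The output reads like fluent English, yet the loop simply circles back on itself because it only follows frequency patterns. That is the transcript’s point in miniature: text that “looks right” is not evidence of understanding.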

How are we supposed to use them?

So, if these LLMs aren’t truth machines, how are we supposed to use them? Well, the answer lies in a huge mindset shift. We have to move away from search and start thinking in terms of conversation. This one little change is a total game changer. This idea right here is the key to everything. For the last 20-plus years, we’ve all been trained in what you could call the Google mindset. You know, you type one query, you click a link, you’re done. But using an LLM that way is, well, a literacy problem. The real learning, the thinking, doesn’t happen in that first answer. It happens in the back-and-forth.

Simple Three-Step Loop Framework

And we can actually put this into practice with a super simple three-step loop, the PPP Framework: Prompt, Probe, and Prove. So, you start by asking the model for something. That’s the prompt. But then, and this is the most important part, you probe its answer. You question it. You poke holes in it. And finally, you prove it. You check its claims against real sources or your own critical thinking.

Now, this is where it gets really interesting, because we can turn that simple loop into concrete habits that build real critical thinking. This is where we go from just talking about it to actually doing it. Think of these as your new rules for talking to an AI. Rule one, and this is a big one: never, ever stop at the first answer.

Rule two: always follow up. Ask why it gave you that answer. Ask what would happen if you changed the situation. And rule three: force it to compare things. Make it argue for one idea over another. That forces you, the human, to be the judge. I mean, just look at what a simple follow-up question does. It completely changes the dynamic, right? It forces the model to go back and reconsider its own work, and it pushes the student to start thinking about what a really good, sophisticated answer would even look like. You’re not just getting an answer anymore; you’re interrogating it.

So this whole conversational approach doesn’t just change how students learn; it totally transforms how educators can teach. It lets them stop acting as AI police and start using AI to drive deeper learning.

Move from Policing to Literacy

So instead of trying to ban AI, what if assignments looked like this? You require students to submit a transcript slice of their conversation with the AI. They have to show their prompts, what they took from the AI’s responses, and, this is the money shot, what they rejected or changed and why. It forces them not just to use the AI but to actively wrestle with it, question it, and justify their own thinking. It makes their learning process visible. And that’s the whole point right there. The goal completely flips. The objective isn’t to produce a piece of writing from a blank page anymore. It’s to show your human judgment, your creativity, and your critical thinking on top of the AI’s first draft. Your value-add becomes the thing that gets graded.

The Future of Education

Okay, let’s zoom out one last time. What does all this mean for the future of education? Because if we really embrace this conversational model, AI stops being a threat and becomes something else entirely. You know, it really helps to put AI in its historical place. It’s not some alien technology that just dropped out of the sky. It’s the latest in a long, long line of cultural tools, like writing, the printing press, and the internet, that have always expanded what humans are capable of and forced us to adapt. And that right there is the real opportunity. This isn’t just about damage control or catching cheaters. It’s about using AI as a catalyst to unlock new levels of human creativity and insight. It’s about raising the bar for what we expect from our students and, frankly, what we expect from ourselves.

So this is where we’re left. For generations, education has been about teaching people the answers. But we now live in a world of infinite answers, so the truly valuable human skill is asking the right questions. The answers are easy now. The questions, the questions are hard. So the challenge for all of us is this: are we ready to start asking better ones?

End of Video Transcript

Author’s Note

These sources collectively offer a comprehensive analysis of the opportunities and challenges presented by the integration of Generative Artificial Intelligence (AI) in higher education globally. The first source presents a project brief aimed at helping higher-ed educators rethink Large Language Models (LLMs) not as cheating tools, but as pattern-based generators requiring human guidance, critical thinking, and ethical judgment. This theme is echoed in the “AI and the Future of Pedagogy” white paper, which explores how educators can wisely adopt AI to prevent the erosion of essential human skills like critical discernment and analytical reasoning, proposing assessment changes that move beyond a surveillance-based approach. The final report from the Global Consortium on AI and Higher Education for Workforce Development provides international case studies highlighting the urgent need for institutional policies, faculty upskilling, and stronger collaboration between academia and industry to bridge the digital skills gap and prepare students for an AI-enabled workforce. All sources emphasize that effective AI use must prioritize developing human-centric skills and ethical literacy over simple automation.

Another Video Version

Article Background

I’m a doctoral student at Johns Hopkins School of Education, currently researching the use of generative AI in academic writing and its effect on “authorship” and “academic integrity.” Throughout my research, I consistently find data that highlights gaps in students’ and faculty members’ knowledge of how to use generative AI tools.

My proposed study takes a pragmatic mixed-methods approach in response to this gap. The quantitative strand will draw on post-positivist assumptions to map patterns in AI literacy and beliefs about acceptable AI use across a large sample of students and faculty, similar to the large-scale views offered by Chaudhry et al. (2023), Revell et al. (2024), and Sharma and Panja (2025). The qualitative strand will rest on interpretive assumptions and build on work such as Guan and Han (2025) and Singh and Ngai (2024), treating authorship and originality as ideas that people actively construct in practice and in conversation with institutional expectations. This mixed-methods design offers a more complete picture, aligning with Donella Meadows’s reminder in Thinking in Systems: A Primer to “pay attention to what is important, not just what is quantifiable” (Meadows, 2008, p. 175). A pragmatic stance brings these strands together around a guiding question: What combination of methods will best help us understand how authorship is being reshaped in the era of generative AI, and how can that understanding inform practice in real classrooms and institutions?

Based on these studies, I anticipate limitations in my research due to the rapidly changing technological landscape. I will be researching and measuring a moving target: these generative tools are iterating and growing literally every day. There is also rising concern about the environmental impact of these massive generative tools and the data centers that drive them. How might these factors divert, bias, or otherwise affect my research and results? Drawing on Meadows’s systems-thinking perspective, I will analyze leadership as a leverage point: when it is aligned with the goals of the whole, systems can evolve sustainably; when it remains unchanged, systems tend to replicate the same results (Meadows, 2008, p. 145). She states:

From a systems point of view leadership is crucial because the most effective way you can intervene in a system is to shift its goals. You don’t need to fire everyone, or replace all the machinery, or spend more money, or even make new laws – if you can just change the goals of the feedback loops. Then all the old people, machinery, money, and laws will start serving new functions, falling into new configurations, behaving in new ways, and producing new results. (Meadows, n.d.)

So I went down a rabbit hole researching this topic and decided to group a few resources together in NotebookLM to see what it could generate for me. I had heard recently that it could produce presentation decks and infographics (as seen above) thanks to the new Gemini 3 model. Well, it can also produce video content: that’s where the two videos in this article came from. You’ll see a few typos and some odd design choices in the videos, but overall, for a 34-minute process, the assets it generated were very helpful and exciting to see.

References

Chaudhry, I. S., Sarwary, S. A. M., El Refae, G. A., & Chabchoub, H. (2023). Time to Revisit Existing Student’s Performance Evaluation Approach in Higher Education Sector in a New Era of ChatGPT — A Case Study. Cogent Education, 10(1), 2210461. https://doi.org/10.1080/2331186X.2023.2210461

Guan, Q., & Han, Y. (2025). From AI to authorship: Exploring the use of LLM detection tools for calling on “originality” of students in academic environments. Innovations in Education and Teaching International, 62(5), 1514–1528. https://doi.org/10.1080/14703297.2025.2511062

Meadows, D. H. (n.d.). The question of leadership: A good leader sets the right goals, gets things moving, and helps us discover that we already know what to do. The Academy for Systems Change. Retrieved November 17, 2025, from https://donellameadows.org/archives/the-question-of-leadership-a-good-leader-sets-the-right-goals-gets-things-moving-and-helps-us-discover-that-we-already-know-what-to-do/

Meadows, D. H. (2008). Thinking in systems: A primer (D. Wright, Ed.). Chelsea Green Publishing.

Revell, T., Yeadon, W., Cahilly-Bretzin, G., Clarke, I., Manning, G., Jones, J., Mulley, C., Pascual, R. J., Bradley, N., Thomas, D., & Leneghan, F. (2024). ChatGPT versus human essayists: An exploration of the impact of artificial intelligence for authorship and academic integrity in the humanities. International Journal for Educational Integrity, 20(1), 18. https://doi.org/10.1007/s40979-024-00161-8

Sharma, R. C., & Panja, S. K. (2025). Addressing academic dishonesty in higher education: A systematic review of generative AI’s impact. Open Praxis, 17(2). https://doi.org/10.55982/openpraxis.17.2.820

Singh, R. G., & Ngai, C. S. B. (2024). Top-ranked U.S. and U.K.’s universities’ first responses to GenAI: Key themes, emotions, and pedagogical implications for teaching and learning. Discover Education, 3(1), 115. https://doi.org/10.1007/s44217-024-00211-w